Five Stages of Technology Sophistication

Much has been said about the transformative effect that technology (to be more precise, computer software) has had on various industries. But the effect is not new, nor is the transformation anywhere close to complete. Looking across industries, we can notice common themes. Specifically, while the degree to which industries use technology at any given moment varies (today, for example, marketing has a much more sophisticated set of tools and technology practices at its disposal than mortgages do), as they evolve they seem to embrace technology along the same path. I've isolated five distinct stages, described below.

Stage One: Pre-technology

Industries usually begin as human endeavors. There are, of course, exceptions, most notably industries that were made possible in large part by technology (such as bioengineering). But even today, surrounded by an abundance of tools and services that make it easy (so to speak) for the masses to technologize their efforts, we are fundamentally, as a species, not technology-literate in the way we are literate in speaking or writing. Walk into any household or company: technology is relegated to technology-builders, and everyone else does everything else. True, there are pockets where these proportions are out of whack, but even technically sophisticated companies like Google have an order of magnitude more non-technology solutions than technology solutions.

On one hand, this is not surprising. Technology, and especially software, is very different from other systems humans have adopted, and one of its biggest strengths is, in my view, also the reason it's so hard for everyone to adopt. It's just so damn precise. There is little, if any, redundancy built in. Computer programs are recipes as rigid as rail lines: they explicitly move state from A to B under very specific conditions. Programming languages are compact (only a handful of keywords) and highly grammatical, but expressiveness suffers. Try replacing one character in your Ruby or Java code and your program will likely fail to compile or run. This means that to be truly technology-literate, one needs a seriously mathematical and systematic mind, capable not just of creating abstract representations of objects (which every brain does quietly, all the time) but also of describing those representations very explicitly. This is why attempts to make programming "user-friendly" with graphical, diagram-style metaphors or WYSIWYG controls always fall short of expectations.

On the other hand, I can't imagine this state of affairs persisting much longer (on a grander scale of things, say a century or so). But that's a topic for another time.

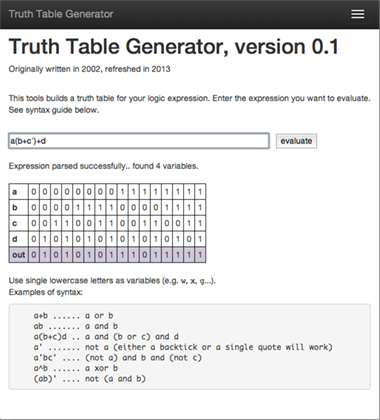

Stage Two: Local technology

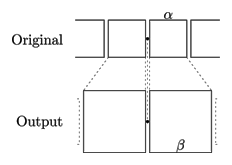

As industries focus on systematization and cost-cutting, technology is usually brought in. This takes a very specific shape. I call it local technology: solutions that usually help individuals or, at most, solve very small, specific problems. Part of why local technology is the earliest stage in this evolution is historical: computers only became widely connected in the 1980s, while software existed long before then. But local technology is also easier to get one's head around and, most importantly, it doesn't violate interfaces. If you worked at company X, local technology didn't change your inputs and outputs; you still interacted with the same people and had the same responsibilities. Technology simply made you more efficient.

My recent favorite industry, mortgages and mortgage origination, illustrates this stage perfectly. The complexity of the use case (completing a mortgage application file) quickly outgrew purely human processes, and systematization took advantage of the technology of the 80s, which was local technology. Each of the back-office "stations" (loan officers, processors, underwriters) optimized its own environment, but the interfaces between them stayed the same because technology couldn't yet connect the stations in any strong way. The industry became so deeply entrenched in this form of technology that only relatively recently have we begun to see a transformation from heavily optimized individual stations to optimized systems that include all stations (and perhaps redefine the stations altogether).

Stage Three: Connected technology

This is easily the most exciting and enabling stage in the evolution of any industry. We are, of course, living in the Renaissance of Connectedness: not a day goes by without my learning about a new problem space being "disrupted" by technology, which really just means a company successfully innovating on a business model to take advantage of the omnipresent, all-connecting Internet. That's exactly what Uber, Airbnb, Facebook, Twitter, Dropbox, Spotify, and dozens of other large tech companies are doing (and can we really still call the private ones startups?). The late 1990s saw a flurry of activity, but the environment wasn't quite ripe for true connectedness, which near-universal broadband and then mobile data made possible. Truly connected technology had to wait until the mid-to-late 2000s, and there's still a lot left to connect.

Stage Four: Analysis and insight

The connecting power of technology simply should not be underestimated. It will continue its run until the majority of industries are shaken up and redefined by the notion of connectedness. But the value of technology doesn't end there. Most industries, once connected, turn to data and to understanding what lies behind it. Except in limited cases, analytics and insight become tremendously more useful only once the data from all connected endpoints are pooled and analyzed. That's the big idea behind the somewhat overhyped term Big Data.

Analysis and insight are a frontier beyond mere connectedness. In search of further efficiency, industries must begin to understand the processes they put in place (via local technology) and connected (via connected technology). And while we hear about plenty of companies whose competitive edge lies in analytics, the aforementioned Big Data slogan just won't die, and Data Scientists are now among the most sought-after professionals, we are only at the very beginning of useful analytics.

Stage Five: Artificial Intelligence

No matter how sophisticated, analytics is only as good as the heuristics humans can think of or the trends that naturally emerge. In a quest for insight beyond that, we turn to technology's ultimate promise: that of emulating human intelligence. While a handful of companies can boast truly artificially intelligent systems (IBM comes to mind, as do Google's computers hidden somewhere deep in the company's basements, processing facts under Peter Norvig's careful watch, and perhaps Tesla), the solution space is nowhere near as "toolified" as the earlier stages. Local technology has applications going back to the earliest computers, connected technology has the Web, and analysis and insight have machine learning and data visualization, but Artificial Intelligence remains mostly confined to research papers and some companies' secret projects.

***

What is the significance of these five stages, and what can we learn from this taxonomy? First of all, understanding a particular industry's evolution, overlaid on the evolution of software, helps us understand the challenges and opportunities inherent in that industry. If an industry grew tremendously before connected technology became a reality, its existing players are likely plagued by legacy local technology with only a modicum of connective tissue. If an industry seems to have only just become connected (for example, an enterprise space moving from on-premise to SaaS solutions), the opportunity to look out for will likely lie in the insights now made possible by the proliferation of data. Finally, it's useful to distinguish between analytics and intelligence, even though we still have a long way to go before we see a wave of new "disruptions": business model innovations stemming from our ability to scale human intelligence near-infinitely.