Business plans never survive first contact with customers, [so] get out of the building, talk to your customers, and iterate [until] you see their pupils dilate!

– Steve Blank

One of the best things about Stanford is that you get to interact with some great people. I had the pleasure of taking a class taught by Steve Blank. The class was called Lean Launchpad and it was an inverted-classroom lab where teams of four people worked on their startup ideas. The only requirement was that teams had to follow the lean methodology: form hypotheses about the various elements of their business (such as customer segments, the value proposition of their company, the revenue model, etc.) and run tests to either confirm or reject these hypotheses. If you haven't heard about the lean startup, you should really read up on it. You also needed to test hypotheses primarily by talking to customers, not by doing market research or sitting and thinking about them. The main guiding principle is that evidence for your hypotheses cannot have originated in your head.

And so in Lean Launchpad, 90% of the work happened "outside the building" – not in the classroom or at home, but in the field, interviewing potential customers. With 10 meaningful customer interviews per week for 10 weeks, the class was exhausting, but – as Steve was quick to point out several times – it's only a tiny preview of the workload, the intensity and the emotional roller coaster of what having an actual startup will subject you to.

I say "the pleasure of taking the class" for a couple of reasons. First, Steve Blank does not filter. He says it as he sees it, and he does not wrap criticism in niceties. He will interrupt you mid-sentence. Some find him too abrasive, but since I increasingly have to deal with people who rarely say what they think and often pad things out, I found it refreshing. I felt like I learned a lot more once I was out there, vulnerable, facing the Wrath of Blank after I failed to talk to enough customers.

Secondly, I have come to cherish experiences in my life that have, over a relatively short time period, made me truly internalize a concept, and Lean Launchpad with its guiding principles of lean startup and customer-driven development was one of them. Prior to taking the class, I would have told you "Sure, it's important to talk to customers". After all, everything that Steve Blank talks about makes sense, and because it sounded like common sense, I would dismiss the teachings as something that I was already doing. But what I had done was merely intellectualize his point. When it came to actually working on my venture idea, I would forget the point and go back to my old ways.

What were these old ways? I would short-circuit talking to customers, believing that I knew ex ante what the customer would say. I would avoid thinking about the hypotheses implicit in my business ideas, and even if I did write them down, I would avoid testing them out of fear of being wrong. I was also being lazy, and avoided talking to customers because talking to customers is uncomfortable (at least at first), hard and takes too much time.

Even when I did eventually talk to customers, I realized that I wasn't really doing a good job. I wasn't listening. You know how sometimes you talk to someone, and when they speak you politely nod but all you're thinking of is what you're going to say next? That's what I was doing. I would also often skillfully steer the conversation in a direction that may confirm my assumptions, leading the customer in a direction I wanted him or her to go, rather than learning new information.

Finally, in avoiding talking to customers, I used an excuse – a sneaky, dangerous excuse that I often hear people use. It was that "Some ideas just don't lend themselves well to customer development". I would pontificate about how the iPhone would never have been invented if Steve Jobs had talked to customers.

Steve Blank helped me realize what it means to talk to customers well. Afterwards, I wrote up my experiences:

The problem is that there are good ways of talking to customers, and bad ways of talking to customers. There is a kind of art to interviewing people in a way that lets you extract meaningful information while avoiding many of the common biases that as a founder you will likely be prone to. Such biases are generally a manifestation of a very simple problem: we are by nature egotistical. We care about our idea, our product more than someone else's pain. We fall in love with our product and subconsciously, we lead with questions that avoid the possibility of uncovering an uncomfortable truth about our product or the need we believe in. We also constrain our customer to give us meaningless feedback about something specific, rather than offering valuable feedback about something broader.

To avoid this mistake, don't start with the product. And definitely don't start by showing the product to the customer. When I did that, instead of getting creative, insightful comments that correspond to actual need, I "Wow"ed the customers and got very superficial feedback. You see, when you start with a product (especially the visualization of the product) rather than establishing a need first, you're forcing the customer to adopt the role of your product's user. This means that your customer will make lots of assumptions about what the product does, what it's for, and how it should be used. You're, in effect, increasing the distance between your customer and her need. You're also turning your customer into a refiner who'll try to respond at a granular level to what's in front of her. You're numbing her creative and discriminating faculties because you've given her something very tangible to respond to. Finally, you're preventing yourself from learning about your customer. Maybe if you had understood more about your customer's needs (and the pains in her everyday job), you would be able to come up with a much better painkiller.

Instead, I like to start very generally. What does the customer do every day? How does she do it? What is working and what isn't? What are the biggest pains? In an ideal world, what would she not need to be doing? How would she want to be doing her job? Once I establish this broad landscape, I zoom in, focusing on the big needs and pains (which should hopefully be aligned with what your product does – if not, explore them more before turning your customer's attention away from her biggest pain and towards your solution).

Finally, be present in the interview. It's tough to do, because we want the conversation to go somewhere. We want to manufacture insight. And insight can't be manufactured. If a customer wants to tell you a story, let him do it. If a customer seems more interested in what's in your opinion a minor aspect of your product, listen.

Only when I had internalized – and I mean truly internalized – the above (which meant erring on the side of customer development and a hypothesis-driven approach even if it didn't feel efficient, or useful), did I allow myself to step back and think about the limitations of the Blankian Imperative. For it's not a panacea, suffers from its own biases, and should not be indiscriminately applied to everything.

First, I encourage you to separate hypothesis-driven development from the lean startup model. In lean startup, you want to fail fast. Failing fast gives you momentum and is a good forcing mechanism to avoid going down rabbit holes (and it also makes for good stories and makes it possible to have a class like Lean Launchpad). But failing fast also means that you run the risk of never committing to something you believe in. This may manifest itself as you setting a low bar for rejecting a hypothesis. Many of my friends in the class would drop a hypothesis after talking to just one person. In theory, failing fast is not a big problem because all you're looking for is one true positive, and so wrongly rejecting a few good hypotheses along the way (false negatives) seems like an acceptable price for speed. But if you fail fast, you may miss the really big problems that are waiting to be solved but are disguised as noise.

In fact, you can run a full hypothesis-driven process with customer discovery but not fail fast. This means that your hypotheses take a little longer to prove or disprove. If you don't have a problem motivating yourself (and don't need constant momentum to keep you going), these "slower" tests won't pose a threat to your startup.
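To put a rough number on the risk of dropping a hypothesis after a single conversation, here is a minimal back-of-the-envelope sketch. Everything in it is my own assumption for illustration: the function name `chance_of_missing` is hypothetical, the 30% figure is made up, and it treats interviews as independent draws from the same customer segment, which real interviews rarely are.

```python
def chance_of_missing(share_with_pain: float, interviews: int) -> float:
    """Probability that none of the interviewed customers has the pain,
    assuming each interview is an independent draw (an assumption)."""
    if not 0.0 <= share_with_pain <= 1.0:
        raise ValueError("share_with_pain must be between 0 and 1")
    if interviews < 0:
        raise ValueError("interviews must be non-negative")
    return (1.0 - share_with_pain) ** interviews


if __name__ == "__main__":
    # Hypothetical segment where 30% of customers really have the pain.
    for n in (1, 5, 10):
        print(n, round(chance_of_missing(0.3, n), 3))
```

Under these assumptions, a single interview fails to surface a real pain most of the time, while ten interviews rarely do. That is the arithmetic behind letting a test run a little longer before rejecting a hypothesis.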

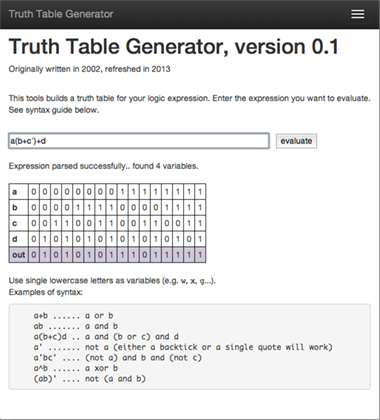

Secondly, I would choose a well-run intuitive process over a poorly-run hypothesis-driven process any day. I've seen a number of teams that think they are going down a good road only because they followed the process. Usually, this manifests itself as vaguely-phrased hypotheses (or statements that don't really sound like hypotheses: a hypothesis is a belief, an assumption that can be rejected in the presence of appropriate evidence), tests that act like checklists of hypotheses ("Oh, I've heard the customer mention this, so check!") and rejections/acceptances that simply add the checks up.

Once you decide you want to be hypothesis-driven, think of your startup like a scientist would. Are your hypotheses falsifiable? Would two reasonable people agree on whether particular evidence rejects a particular hypothesis? Do the tests provide strong evidence? It may help to have one part of the team try to keep the hypothesis and another part of the team try to kill it.

Finally, yes, you might be thinking of a product that unearths new demand, as was the case with the iPhone. Customer development is much more difficult in a case like that, because the pains are likely horizontal (i.e. lots of little seemingly unrelated pains that are all solved with your product), and muted (because your customers are so used to dealing with the world without your product that they have come to terms with its pains). But all this means is that you have to be smarter and more creative about your customer interviews. Don't hand an iPhone (or whatever equivalent you've envisioned) to your customer, asking what pains it would solve. Your customer would likely not know, even though he might be wowed by the gadget. Instead, observe your customers as they go through their daily lives and do the workflows that you think your product might address, and take notes. I'm a big fan of shadowing people – following your customer for a day may seem like the most inefficient way to get information, but sometimes it's really the only reliable way of getting information that didn't originate in your head.